Azino777 is a very popular online casino brand offering a large selection of slot machines. Registering at Azino 777 lets you play for real money. The current working mirror, www.azino777.com, will help you log in to your personal account. The casino gives all new players a no-deposit bonus of 777 rubles for registering.


Azino777 official website

The main page of the official Azino777 website shows only the essential information. It has a classic layout with the core options, promotional banners, and the game catalog. Convenient navigation lets even beginners find their way around quickly.

Key information:

| Parameter | Details |
|---|---|
| Promotions | 777-ruble no-deposit bonus; cashback; birthday gifts; personalized bonuses |
| Official website | www.azino777.com |
| Casino games | Slot machines, video poker, roulette, card games, live dealer games, blackjack |
| Game software | Microgaming, EGT, Igrosoft, Novomatic, BGaming, Evolution, Aristocrat, Alps, Megajack, Endorphina, Unicum, Amatic, Eguzi, Pragmatic, Game Art, Belatra, Habanero, iSoftBet, SPF, Ainsworth |
| Deposit methods (min. 100 RUB) | Neteller, VISA, Skrill, QIWI, MasterCard, Bitcoin, ecoPayz |
| Withdrawal methods (min. 150 RUB) | Neteller, VISA, Skrill, QIWI, MasterCard, Bitcoin, ecoPayz |
| Account verification on withdrawal | Yes, if the withdrawal exceeds $1,000 |
| Withdrawal time | Up to 72 hours |
| Withdrawals on weekends | Yes |
| Withdrawal limit | $20,000 |
| Account currencies | Russian ruble, Turkish lira, US dollar |
| Site languages | English, Russian, Turkish, Chinese |
| Players not accepted from | United States, United Kingdom, Ukraine, Turkey, Spain, Italy, France |
| Cashback on losses | Up to 12% |
| Rewards | Gifts |
| License | Curacao |
| Casino type | Browser and mobile versions, live casino |
| Support | support@azino777.com, 24/7 |
| Launched | 2011 |

On entering the club, you can view the following sections:

- Slot machines and responsible gaming

- Ways to bypass blocking

- About the casino

There is also a "License" section where you can verify that the official documents are in place. The casino is open around the clock and can be accessed from any device. The mobile version lets you open your favorite club on smartphones, iPhones, and tablets and enjoy gaming in a convenient format. The gold logo immediately catches the eye and highlights the main gambling symbol, the three sevens that players call the "three axes." After registering, you can play on the Azino777 site in two modes and switch between them as you like.

Demo mode lets you play the 777 casino's slot machines for free. New players use it to learn the rules without risking their own money.

Registration steps at Azino777 casino

Before the real battles begin, read the user agreement, which you will be expected to follow. It is an official document, and violating its terms results in a ban. Only adults may become club members. Using multiple accounts, spamming, running third-party software, and using other people's data are prohibited. If any attempt to breach the agreement is detected, access to the account is blocked along with all bonuses and winnings. For its part, the administration undertakes to meet its obligations and ensure uninterrupted play for its customers.

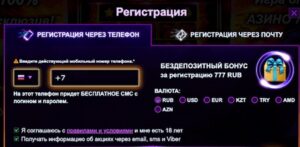

This is what the Azino777 registration form looks like.

Registering at Azino777 opens the door to a diverse, unpredictable, and exciting world of gambling. Players can use all services, receive frequent bonuses, chat with like-minded people, and play against real opponents. There are two ways to register:

- By email: enter your email address, choose a currency, and set a password

- Through a social network: sign in with any popular network where you have an account

Confirming your details means you accept all of the club's terms and rules. A link is sent to your email address or via messenger, and you need to confirm it. You are then automatically redirected to your personal account.

Logging in to your personal account after registering at the online casino

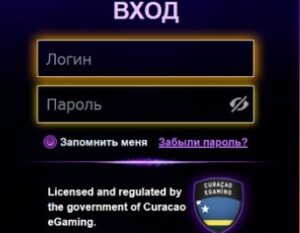

This is what the Azino777 login form looks like.

In your personal account, you need to fill in detailed information about yourself, including your full name, date of birth, country, and city of residence. The data must be accurate. You choose a currency when registering, so take this choice seriously. Rubles, dollars, euros, tenge, and other options are allowed. You will not be able to change the currency yourself later. In exceptional circumstances, you can submit a request that requires approval from the administration. Approval takes time, which delays withdrawals.

"An online casino is a website or special program that provides access to any type of gambling when connected to the internet." (Wikipedia)

From then on, you log in to the casino by entering your login and password.

This rule applies regardless of the device or site (official or alternative). Every regular customer can use account privileges such as editing personal data, changing the display format, viewing active bonuses, and receiving personalized promo codes. If you subscribe to the casino's mailing list, you are unlikely to miss most of this information.

Azino777 working mirror, current as of today

An Azino777 mirror is a reliable alternative site that fully copies the main site, letting you use the familiar services when the official portal is blocked.

Before trying to bypass automatic blocking by legal means, check your browser settings and your internet connection. If that does not help, other methods are available:

- Proxy servers: you enter a proxy address manually in your settings, and the connection is routed through that server.

- VPN: you choose an IP address in any region, which makes it possible to play at the casino from anywhere in the world.

These methods are fairly popular, but their downsides are extra costs, the need to register, and low effectiveness in some conditions. To ensure free, uninterrupted access to the Azino777 online casino, the administrators recommend using mirrors.

All players need to do is find a working domain, which is easy to do using:

- A search engine: active domains usually appear at the top of the results

- A request to technical support, sent by email or via online chat

- Closed themed pages: a Telegram channel or social networks

A working alternative site is no different from the official one.

Players can log in to their personal account by entering their credentials. Guests can play for free 24/7 and, if they wish, register in a few clicks.

Playing online at Azino777 casino

All customers can play at Azino 777 by visiting the "Game Hall" section. The catalog is available in two modes, so beginners are better off starting with risk-free play. It follows the standard format and keeps the rules and conditions set by the provider. Before starting, players receive virtual currency for placing bets; then they adjust the settings and click Start. While playing, you can experiment with your chances of winning and raise the bet after each winning spin, which helps you understand how the game works and how winnings are paid out. Once these rounds are no longer enough, you can move on to real-money games, which are serious and exciting battles.

To relax and chase their dreams, players can browse games by gambling category:

- Table games: games that became popular with the first land-based casinos. They include poker, roulette, video poker, baccarat, blackjack, solitaire, sic bo, cribbage, pontoon, and others. This content is subdivided further, so players can choose Oasis poker or Texas Hold'em; the European, French, or American roulette wheel; and Deuces Wild (Five Hand) or Jacks or Better with wide betting lines.

- Slots: the largest and most popular section among gamblers. It includes many machines with simple settings you can adjust in a few clicks, where you wait for fruits and sevens to line up. For fans of engaging stories, there is a collection that lets you travel, try new professions, and explore new places.

- Live games: rounds with real croupiers who sit in real time in a land-based casino or at studio tables, waiting for a spectacular round to begin. Communication and video streaming rely on modern technology; a reliable connection and excellent graphics and sound let you take full part in the game and play confidently against the dealer.

Most machines follow the familiar rules common in gambling, but many small details set them apart: jackpot draws, special symbols, and win multipliers. That is why it is worth building your own collection, which you can choose risk-free by launching the demo versions.

Playing for real money at Azino777 casino

All registered customers can play the entire catalog for money. After choosing a category, read the rules before you start. Every machine has a description, so learning the rules and features will not be difficult. The games offer excellent graphics, music, generous payouts, bonus features, and a high RTP. Players can choose from different genres:

- Battles, fights, and struggles against evil forces

- Adventure, fairy-tale, cartoon, and original stories

- Historical journeys into ancient civilizations and the past

- Plots from famous comics, cartoons, films, and TV shows

- Travel to different countries, seaside resorts, and desert islands

Leading developers such as Novomatic, Igrosoft, Betsoft, Microgaming, NetEnt, and Amatic have done a lot to make the games exciting, fair, and profitable.

The machines run on a random number generator, so it is impossible to predict the combination of the next round. Only an active approach, knowing when to stop, and Lady Luck herself will lead you to the win you want.

Mobile version of Azino777 online casino

The site adapts to any device as soon as it loads. Whatever the screen size, it is comfortable to use. The mobile version has many advantages, including accessibility, smooth slot play without freezes, no getting kicked out of games, and no other annoyances.

When you launch a slot machine on a smartphone or iPhone, it expands to full screen, and you only need a few taps to adjust the settings. Financial transactions are easy in the mobile version, especially if your account is linked to your phone number.

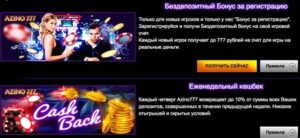

Azino777 bonuses and offers

Playing at Azino777 comes with a steady stream of bonus offers. After registration, you receive 777 rubles, which must be wagered on a specific machine. After that, every deposit of 1,000 rubles comes with an exclusive offer. There is no limit on how many times you can get this gift, which encourages you to stay active.

The casino regularly treats players to prize draws and gifts on their birthday and other special occasions. On Thursdays, customers get up to 10% of their losses back. Subscribers to the mailing list periodically receive personalized promo codes. Tournaments are another way to satisfy your competitive streak by beating other players.

Deposits and withdrawals

You can deposit or withdraw money using a convenient method: Visa, MasterCard, Webmoney, Yandex.Money, Sberbank, Alfa-Bank, cryptocurrency, and others. Transactions are securely protected against fraud, so you can be confident in your money transfers.

Winnings are guaranteed to reach their owners as quickly as possible. The first cashouts may take longer depending on the amount, since verification is required. Filling in your account details completely and accurately significantly shortens this process.

Reviews of Azino777 online casino from real players

Positive reviews from real players show that the club has deservedly held a leading position for many years. Users highlight advantages such as a huge variety of machines, live dealer games, transparency, a great bonus program, privacy, and security.

Real reviews are useful for beginners: they help them understand the advantages and learn more about bonuses and prizes from other players' experience. You can read comments on the official Azino777 site or mirror, on themed forums, and on social media pages.

Great, popular gaming portal. Everyone has heard the AK-47 music video about "how to make some cash"; well, that's Azino 777, also known as Azino Three Axes. Lots of original slot machines and modern slots. Withdrawals are no problem.

I've been playing at Azino for over a year. The browser version works even on mobile; it's a cool site. You can even spin some slots on your phone at work, and the bets let you play with a minimal deposit. Deposit 500 rubles and play to your heart's content.

Large selection of slots and fast payouts. The first withdrawal took a while, as usual, but after that payouts started arriving automatically within 15 minutes.

I liked the fast registration; everything is very convenient and simple. Cool site. I managed to win on my third deposit: I put in about 5 thousand rubles in total and won 40 thousand, which they paid out the same day.

Azino Three Axes is a good casino that pays out honestly. You can get a 777 bonus for registering at Azino, but I'd recommend playing with your own money.

Lots of clueless people who don't know how to play at casinos post negative reviews of Azino and many other casinos online, even though they don't understand anything, not even how to withdraw money without problems. You can withdraw your own money and your winnings from almost any online casino, in any amount; you just need to play by the rules and do everything required for a successful withdrawal, such as account verification. Many people start spreading negativity and claim they were cheated, but account verification at a casino is a standard procedure that is easy to complete, and it exists so that nobody can steal the money in your casino account. By the way, Azino pays out small amounts even without verification.

I'm very satisfied with playing at Azino777. I read other reviews and was afraid to play, but I was pleasantly surprised. None of the bad reviews turned out to be true in practice.

Here's my story:

I signed up, deposited 1,000 rubles, and received a 2,000-ruble bonus. I started playing right away without reading the bonus terms, which was my own fault. The catch is that your deposit money gets spent while you play, but winnings go to the bonus account. To wager the bonus, you have to bet a total equal to the bonus plus the deposit multiplied by twenty, or something like that. In short, it's complicated. I gave up the bonus, deposited again, then again, and lost it all. Some time later I decided to play again. I deposited 1,200 rubles and grew it to 2,000. I wanted to withdraw to see how cashouts work. Account verification took 10 minutes, and then the winnings arrived in my account within about 30 minutes with no fee.

The next day I started playing again and lost another 1,800. My losses totaled about 12,000, I think. I decided to take a risk, deposited 12,000, and won 20,000! I requested a withdrawal right away but expected problems after reading the reviews. Since my account was already verified, the cashout took only a few minutes, and the money was in my account! No fee. No hassle. Just adrenaline and luck! Good luck, everyone: you can play and win at Azino.

I started playing the 777 slot machines quite a long time ago, back when they didn't have NetEnt slots yet. I'll long remember one big win: I deposited $420 and hit about $4,900. Back then I had little idea what wagering requirements and playthroughs were. My request was canceled, and I was told I needed to wager 3x the deposit, but if I wanted to withdraw right away, I had to agree to a 10% fee. I really wanted my money fast, so of course I agreed. Overall I liked the Azino slots, and after that I won more than once, though not such large amounts. In my opinion, it's the best casino for Russian players.

Now I play for real money only at the Azino Three Sevens club. When I started, like everyone else, I got the test money, a 777-ruble no-deposit bonus, and I actually managed to win 10 thousand from that bonus right away. Then I made my first deposit and withdrew the winnings. After that I couldn't win for a long time and was starting to think, as many people write online, that slots only pay out at first. But two weeks later Book of Ra paid me 50,000 on a 50-ruble bet! They paid out without problems. They did ask for verification, of course, but all online casinos do that now: it's a standard basic check that is easy to pass, and they only ask for it once. Now all payouts are processed in about 2 hours.

Lately I haven't withdrawn anything for two weeks again; the slots don't seem to be paying. I'm waiting for the next lucky moment and big win. I have nothing bad to say about the Azino777 club: they pay out, there are lots of slot machines, and if you play sensibly, everything will be fine. My rating: 4.5 out of 5.
